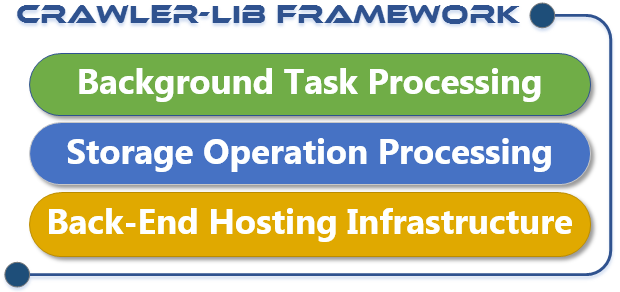

Crawler-Lib Framework

As the name suggests, the Crawler-Lib Framework began as a simple web crawler library. It has since evolved through information retrieval and data mining into a generalized, full-blown application and service back-end framework. It aims to cover the problematic parts of back-end programming so that the developer can concentrate on the business logic. Developed in C# for the Microsoft .NET Framework and the Xamarin Mono platform, it provides the infrastructure needed to host the back-end in a Windows service or Linux daemon.

Background Task Processing

Processing tasks in the background is a major concern for application and service back-ends. Gathering web pages, gathering social media data (tweets, posts, …), sending e-mail, queuing services, processing data (images, XML, HTML, …), transforming data, and sending data (to services, FTP servers, …) are typical jobs that back-ends need to perform.

Having evolved from crawling and scraping through information retrieval and data mining, the Crawler-Lib Engine has become a generalized, workflow-enabled processor for background tasks.

Storage Operation Processing

Typical back-ends have a persistence layer. Experience with crawling and scraping has shown that a large number of arbitrary, parallel storage requests will overwhelm most databases, leading to a high memory footprint and poor performance. The same is true for web application back-ends when the web server comes under heavy load: hundreds of parallel requests flood the database server.

Crawler-Lib provides a storage operation processor that coordinates storage operations and optimizes them for the underlying storage. It acts as a gateway in which operations are queued and then executed at the degree of parallelism that performs best. For example, there may be 200 web requests in the queue, but only 10 are executed in parallel because that is the optimum for a particular database. Operations in the queue can be reordered to keep the front-end responsive, while background operations wait until the system is idle. Several other optimizations are possible to achieve a smaller memory footprint and better performance.
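The gateway idea can be illustrated with a minimal C# sketch. This is not the Crawler-Lib API: `StorageGateway`, `RunAsync`, `PeakParallelism`, and `Demo` are hypothetical names chosen for illustration, and the sketch assumes that a plain `SemaphoreSlim` is an acceptable stand-in for the real queue. It shows only the bounded degree of parallelism (200 queued operations, at most 10 running at once); reordering by priority is not implemented here and would need a prioritized queue in front of the semaphore.

```csharp
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical gateway (not part of Crawler-Lib): limits how many storage
// operations run against the database at the same time.
public sealed class StorageGateway
{
    private readonly SemaphoreSlim _slots;
    private int _running;
    private int _peak;

    public StorageGateway(int maxParallel)
    {
        _slots = new SemaphoreSlim(maxParallel, maxParallel);
    }

    // Highest number of operations observed running concurrently.
    public int PeakParallelism => Volatile.Read(ref _peak);

    public async Task<T> RunAsync<T>(Func<Task<T>> operation)
    {
        await _slots.WaitAsync();              // wait until a slot is free
        try
        {
            int now = Interlocked.Increment(ref _running);
            int seen;
            // Record the peak without locking (retry if another thread raced us).
            while (now > (seen = Volatile.Read(ref _peak)) &&
                   Interlocked.CompareExchange(ref _peak, now, seen) != seen) { }

            return await operation();          // the actual storage call
        }
        finally
        {
            Interlocked.Decrement(ref _running);
            _slots.Release();                  // hand the slot to the next waiter
        }
    }
}

public static class Demo
{
    // Queues 200 simulated storage operations through a gateway of width 10
    // and returns the peak parallelism that was actually observed.
    public static async Task<int> RunAsync()
    {
        var gateway = new StorageGateway(maxParallel: 10);

        Task<int>[] tasks = Enumerable.Range(0, 200)
            .Select(i => gateway.RunAsync(async () =>
            {
                await Task.Delay(5);           // stands in for a database call
                return i;
            }))
            .ToArray();

        await Task.WhenAll(tasks);
        return gateway.PeakParallelism;
    }
}
```

Calling `Demo.RunAsync()` completes all 200 operations while the observed peak never exceeds the configured limit of 10. The best limit depends on the database and would have to be measured rather than assumed.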

Back-End Hosting Infrastructure

Back-end hosting is one of the more tedious aspects of back-end development. Flexibility and robustness are needed. It should be possible to host the back-end on Windows, on Linux, or in the cloud. The back-end should be able to start worker processes (as Microsoft Internet Information Services does) and monitor them to recover from failure. It should be modularized so that a monolithic service or daemon can be split into maintainable modules, and these modules should be easy to test. Finally, the back-end should provide an easy way to communicate with various front-ends.

The Crawler-Lib Framework provides all these features in its infrastructure components for flexible back-end hosting. Thanks to the communication capabilities of the .NET Framework (WCF, REST, TCP, …), these back-ends can use desktop and mobile applications, web servers (such as IIS and Apache), and any kind of existing content management or web shop system (WordPress, Joomla, …) as front-ends.